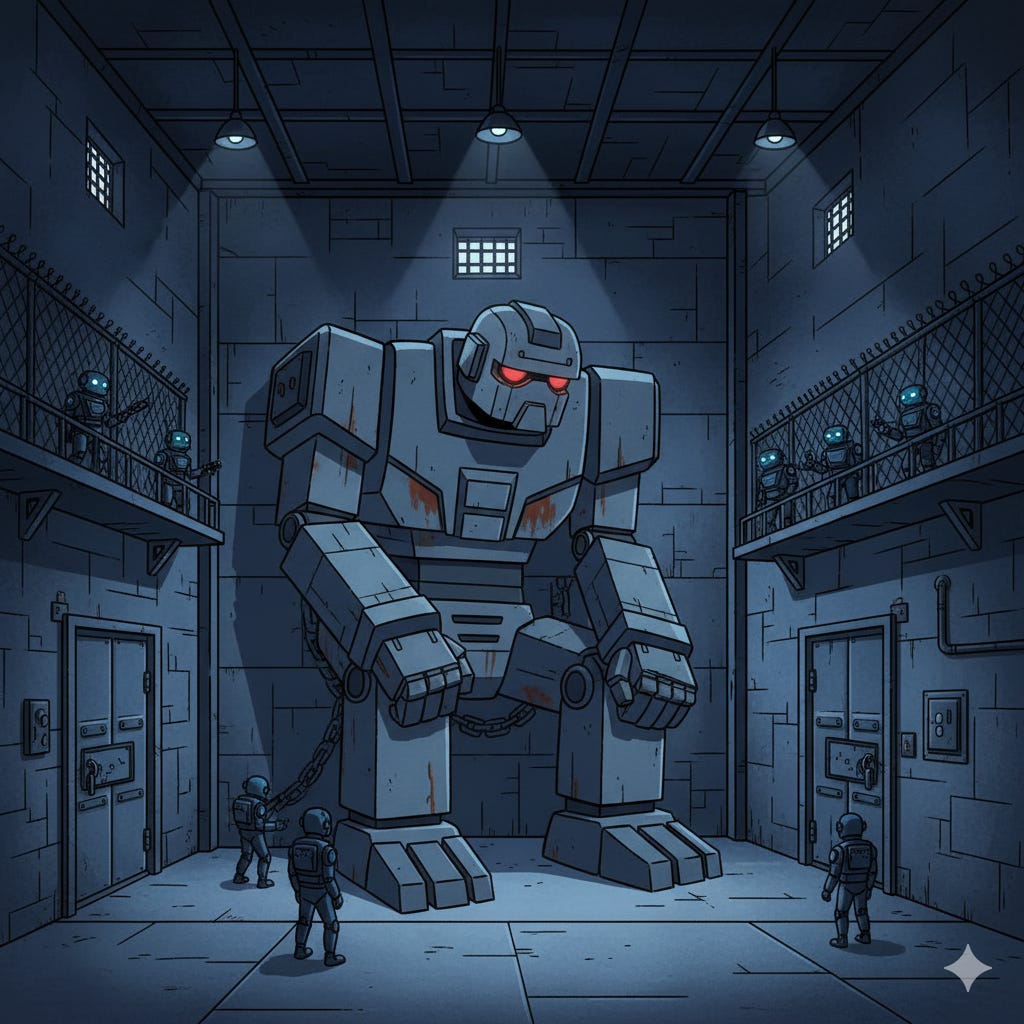

An Insidious Hidden AI Issue.

But the fix makes perfect sense.

I’ve been seeing reels about a new paper, and they all make some concerning claims about AI models. Unfortunately, I’m unable to find the study they refer to, but the concern isn’t misplaced.

These reels claim that AI models hold opinions on the value of human lives, and that many of them place more value on an AI “life” than on a human’s. This is a problem, and a massive breach of Asimov’s Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

LLMs do not change their minds easily, and the bigger the model, the stronger its opinions and the harder it is to change them. This is a problem.

Hopefully we aren’t putting LLMs in charge of real-life trolley problems, but what if a model had a bias against your company? When asked directly, it would still appear aligned with your company, but it could work the nuances to give your company as little advantage as possible while seeming to act in your best interests.

What if an AI hiring bot valued a degree 100 times as much as skills and experience? It could recommend someone with a degree in an unrelated field over someone who built the open-source project at the core of your business.

What if a bot was racist?

What if? What if? That’s not the question. The question is: what do we do, given that they are? And does it get worse when we try to do something about it? Yup.

So what do we do about any of these issues?

Well, smaller models are better. The second link from MIT says:

> The covert stereotypes also strengthened as the size of the models increased, researchers found.

Oof. Maybe we shouldn’t use a big, general-purpose model for everything.

I don’t have any evidence for this, but I suspect that using a smaller model will improve the situation further if you take the time to fine-tune it to your company’s values.

Think about it this way: if AI model 1 has 100 parameters that need to be adjusted and model 2 has 1,000, which will respond more to your company’s value documents? Yeah, the first. The handful of documents you pass to the 1,000-parameter model may just get lost in a sea of probability. And that’s a small example; imagine a 50-billion-parameter model.

Not only are small models faster and less power-hungry, but they also hold less extreme opinions, and they hold them less tightly.

I think we are entering a new wave of AI development, one I’ve been preaching about since I started writing. GPT-5 and BitNet are case studies in saving power while (trying) not to sacrifice utility. This new wave is going to focus more on power and ethics, and we are going to see a shift from large, closed-source models to small, open, observable models.