Installing GPT4All and why you should - Free July 30

I don’t typically use GPT4All, but there are definite reasons you should.

GPT4All is an all-in-one local LLM provider, server and API server. This means that by installing this one program, you can chat with an LLM, including about your local documents. Honestly, that covers 90% or more of what people do. Here’s the link.

That said, it’s not the most powerful option. I personally prefer Ollama and AnythingLLM for that task, but there are reasons to choose GPT4All. Let’s take a look at them.

Really, my one complaint is that the GPT4All UI feels laggy. I think the main reason is that there is no loading screen: the UI pops up right away but is unusable for a moment. Meanwhile the other frontends, most notably AnythingLLM, have the window pop up with a splash screen. I suspect it takes the same amount of time to load, but the splash screen is a powerful tool.

When to choose GPT4All over other local options

When you will only be chatting or retrieving local documents

I’m going to say this quite often, but the simplicity of GPT4All is really its best feature. And that’s saying a lot. LLM chat interfaces shouldn’t be that difficult, and they aren’t. But when you have multiple moving parts, each with its own dependencies, things can get complicated.

GPT4All does away with all of that. You don’t need to learn HuggingFace commands, remember to start Ollama, or anything like that. It’s all right there. The interface takes care of all of it. Once installed, it’s as easy as an online provider, if we are completely honest. Point it at the docs you need looked at and away you go!

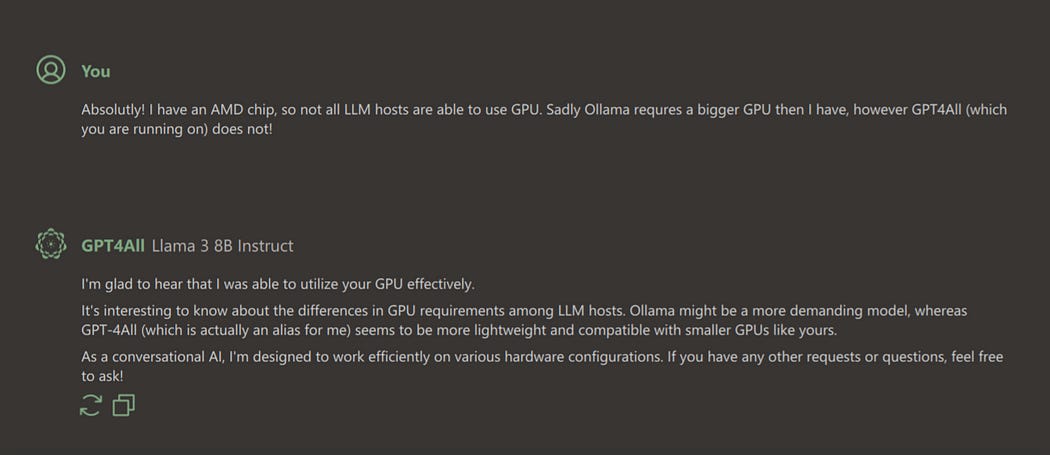

You have a GPU that doesn’t support other providers

My GPU is a bit small for Ollama support. Prior to this I had a GPU that was even smaller. Yes, you can use the CPU instead, but it’s slow in comparison to a GPU.

GPT4All works on both GPUs. It’s great. My laptop runs faster and cooler, and I get responses faster.

You aren’t technical (or that kind of technical)

I get it, you have your own skillset. I have worked with lots of experts in various fields, highly competent people who just aren’t great at AI. I’m the same way; we all are. I just happen to be skilled in AI. With GPT4All you don’t need to have the same skill.

When to absolutely not use GPT4All

You need agents, MCP servers or to browse the internet.

GPT4All does not natively have this ability. This is unfortunate, but I believe there are ways around this. If you need any of these, I’d start with AnythingLLM.

If you think AnythingLLM is more your cup of tea, I can recommend this tutorial that I contributed to while at IBM.

Open Source AI Workshop: Learn how to leverage Open Source AI

Installing GPT4All

(All images are from the Nomic website or the GPT4All UI and are provided for instructional reasons)

Start by navigating to the GPT4All URL. You’ll see a screen that looks something like this.

Download the installer, and once the download is complete, run the exe file. The installer will pop up.

Other than accepting the license, you can just click Next through everything. It’s a generic installer, and this program does not have too many options.

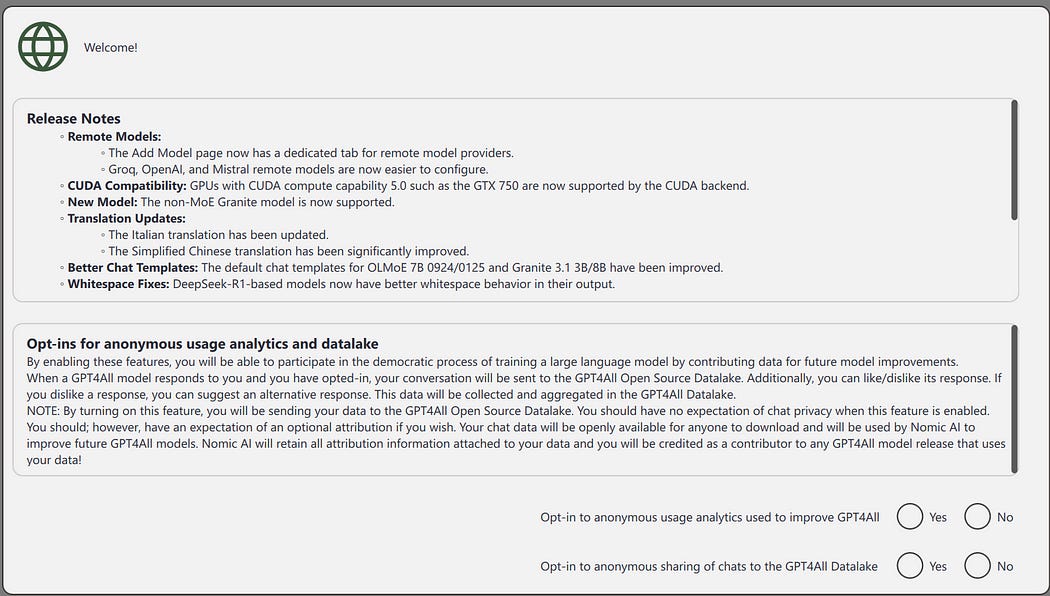

Once installation is done, you can run the program from your Start menu. On the first startup you’ll see a splash screen with two opt-ins. Both of these are optional and you can change them later.

Once you are done with that, you’ll want to go to the find models option. For me it’s prominently displayed along the right-hand side of the screen.

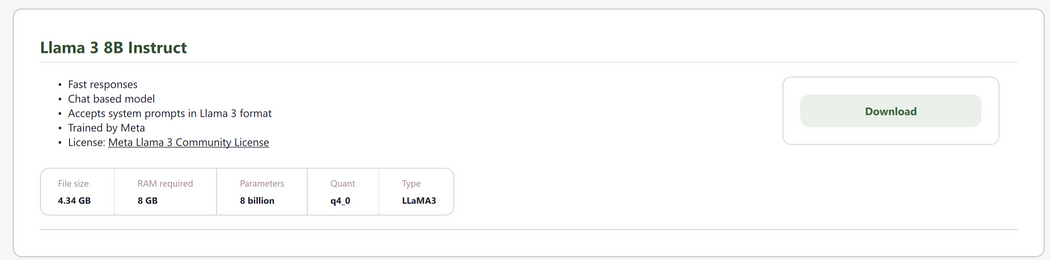

Find a model you want. If you are a paid subscriber to my Substack, you can get a summary of my main thoughts here.

AI platforms and use cases. Subscriber Special

This document is an attempt to provide a summary of common terms and tools in Generative AI. It is designed for people…

markmcguirequantum.substack.com

If you are planning on just chatting, Llama seems to be the best. Hit download on your preferred model.

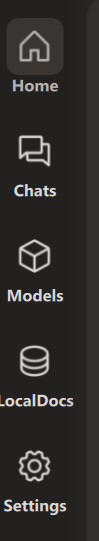

Now, while the model is downloading, let’s make sure your settings are the way you want them. Along the left-hand side of the UI, you’ll see these options. Go to Settings, the gear at the bottom.

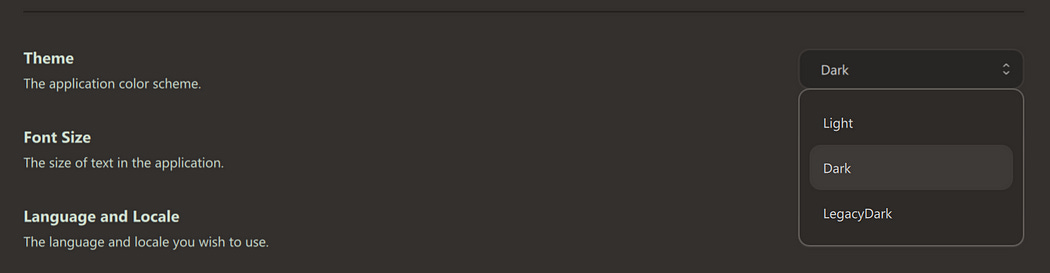

I personally like dark mode, so I change that right away since light is the default.

One of the reasons for this tutorial is the GPU issue, so check the device setting and make sure you have the right one. For my current setup, I have “Default”, “CPU” and a “Vulkan” option. Default seems to be the same as CPU. Since I want to use the GPU, I go with that last option.

One thing to note: I once installed this on a computer with a CUDA-enabled device. There were two GPU options and one did not work. I believe it has something to do with CUDA versions, but if one doesn’t work, try the other.

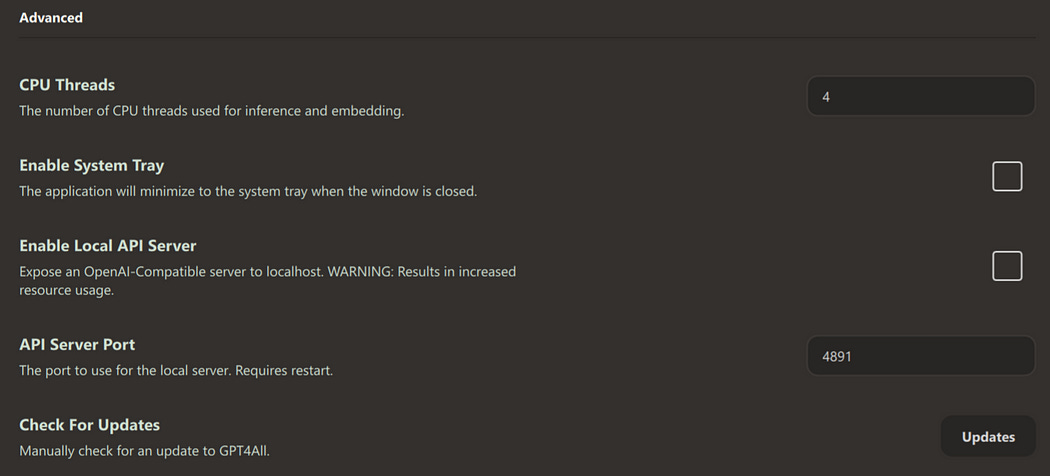
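If you ever script this instead of clicking through the UI, the same "if one doesn’t work, try the other" advice can be written as a small fallback loop. This is only a sketch: `load_with_fallback` and the `load_model` callback are names I made up for illustration, and the device names in the usage note are assumptions you should check against your own setup.

```python
def load_with_fallback(load_model, devices):
    """Try each device in order and return (device, model) for the first that loads.

    load_model is a hypothetical callback: it takes a device name and either
    returns a loaded model or raises an exception if that backend fails.
    """
    errors = {}
    for device in devices:
        try:
            return device, load_model(device)
        except Exception as exc:  # a broken backend typically fails at load time
            errors[device] = exc
    raise RuntimeError(f"No device worked: {errors}")
```

With the `gpt4all` Python package installed, the callback could be something like `lambda d: GPT4All(model_file, device=d)` with a device list such as `["cuda", "kompute", "cpu"]`. Treat those values as assumptions and confirm them in the package documentation for your version.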

If you don’t plan on doing anything programmatically, you shouldn’t need anything else here. Otherwise, you may need to enable the local API server. Good luck!
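For the programmatic route, here is a minimal sketch of what talking to the local API server might look like once it is enabled in Settings. I’m assuming the server speaks an OpenAI-style chat API at `http://localhost:4891/v1` (4891 being the default port as far as I know), so check the port in your own settings. The helper names `build_chat_request` and `ask` are mine, not part of GPT4All.

```python
import json
import urllib.request

# Assumption: default local API server address; change it if your settings differ.
BASE_URL = "http://localhost:4891/v1"


def build_chat_request(prompt, model, max_tokens=200):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        BASE_URL + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # Assumes the OpenAI-style response layout: choices -> message -> content.
    return body["choices"][0]["message"]["content"]


# Example call (needs GPT4All running with the API server enabled):
# print(ask("Say hello in one sentence.", "name of a model you downloaded"))
```

The model name has to match one you actually downloaded in GPT4All, so the string in the example call is just a placeholder.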